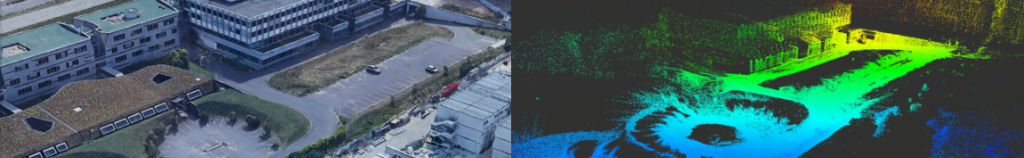

In mobile robotics, my research focuses on scene understanding using embedded multi-modal perception systems. This problem is central to high-impact societal applications such as Intelligent Transportation Systems, in particular Advanced Driver Assistance Systems (ADAS) and autonomous vehicles.

Key Research Areas

Simultaneous Localization and Mapping (SLAM)

- Multi-modal loop-closure fusion

- HOOFR SLAM vehicle localization

- Machine learning and AI-based algorithms

- Hardware/software co-design

Context-Aware Multi-Modal Perception

- Calibration-free data association

Computer Vision

- Multiple-view geometry

- Scene motion reconstruction

Filtering and Multiple Target Tracking

- Bayesian approaches

- Multi-sensor data fusion

- Data association

Ongoing work for industrial applications and academic studies, such as:

- Embedded rail defect detection system

- Trajectory prediction systems for autonomous vehicles

- Rider behavior characterization using gaze analysis